AWS A2I with Textract

Contents

- Introduction
- Create an S3 bucket
- Create a Work Team
- Create a Human Review Workflow
- Start a Human Loop
- View Human Loop Status and get Output Data
- Conclusion

Introduction

It is hard to imagine the modern world without machine learning. We encounter it everywhere: in virtual assistants such as Google Assistant, Apple’s Siri, and Amazon’s Alexa, in personalization and recommendation systems, and in self-driving cars. Two of its most common uses are data analysis and prediction.

Sometimes machine learning predictions are not accurate enough, and getting high-precision results requires an additional verification step. Amazon Augmented AI (Amazon A2I) provides that step: it lets you route low-confidence predictions, or a random sample of predictions, to human reviewers.

To help you get started with the service, AWS provides a convenient demo. It lets you use Augmented AI with Amazon Textract for document review or with Amazon Rekognition for image content review.

We decided to test A2I with Amazon Textract and find out its advantages and disadvantages.

Step 1: Create an S3 bucket

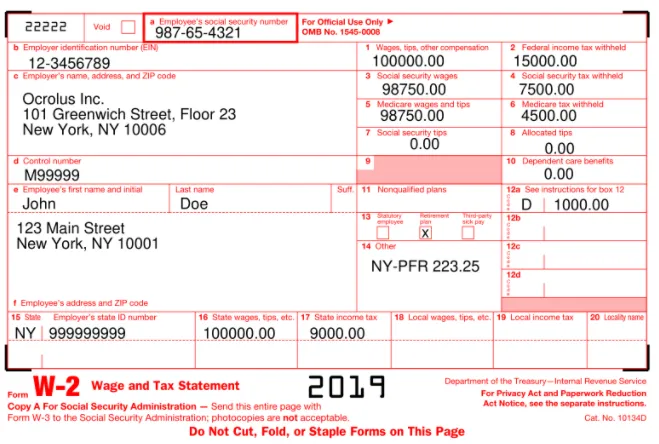

First, we create an S3 bucket and place the sample document in it. Note that the document must be in JPEG or PNG format, and the bucket must be created in the same AWS Region as the workflow.
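The bucket can also be created from code instead of the console. The following is a minimal sketch with Boto3, not the exact procedure used for this article; the function names and arguments are hypothetical. It accounts for one known quirk: `us-east-1` is the default S3 Region and must not be passed as a `LocationConstraint`.

```python
def create_bucket_kwargs(bucket_name: str, region: str) -> dict:
    """Build keyword arguments for S3 create_bucket in a given Region."""
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the S3 default and is rejected as a LocationConstraint.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_bucket_and_upload(bucket_name: str, region: str,
                             local_path: str, key: str) -> None:
    """Create the bucket and upload one sample document (needs AWS credentials)."""
    import boto3  # imported here so the helper above works without AWS access

    s3 = boto3.client("s3", region_name=region)
    s3.create_bucket(**create_bucket_kwargs(bucket_name, region))
    # The sample document should be a JPEG or PNG file.
    s3.upload_file(local_path, bucket_name, key)
```

Use the same Region here that you plan to use for the human review workflow.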

You also need to configure CORS on the bucket so that the document can be loaded in the worker's browser without access problems.
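If you prefer to set this from code, here is a hedged sketch using the Boto3 `put_bucket_cors` call. The helper names are hypothetical, and dropping the empty header lists is a choice made for this sketch (empty lists carry no information), not something the console requires.

```python
# Console-style rule: allow GET requests from any origin.
CONSOLE_RULES = [
    {
        "AllowedHeaders": [],
        "AllowedMethods": ["GET"],
        "AllowedOrigins": ["*"],
        "ExposeHeaders": [],
    }
]


def to_cors_configuration(console_rules: list) -> dict:
    """Wrap console-style rules in the shape put_bucket_cors expects."""
    # Drop keys whose value is an empty list; they add nothing to the rule.
    rules = [{k: v for k, v in rule.items() if v} for rule in console_rules]
    return {"CORSRules": rules}


def apply_cors(bucket_name: str) -> None:
    """Apply the CORS rule to the bucket (needs AWS credentials)."""
    import boto3  # imported here so the helper above works without AWS access

    boto3.client("s3").put_bucket_cors(
        Bucket=bucket_name,
        CORSConfiguration=to_cors_configuration(CONSOLE_RULES),
    )
```

In the console's CORS editor, the same rule is entered as the following JSON: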

    [
        {
            "AllowedHeaders": [],
            "AllowedMethods": ["GET"],
            "AllowedOrigins": ["*"],
            "ExposeHeaders": []
        }
    ]

Step 2: Create a Work Team

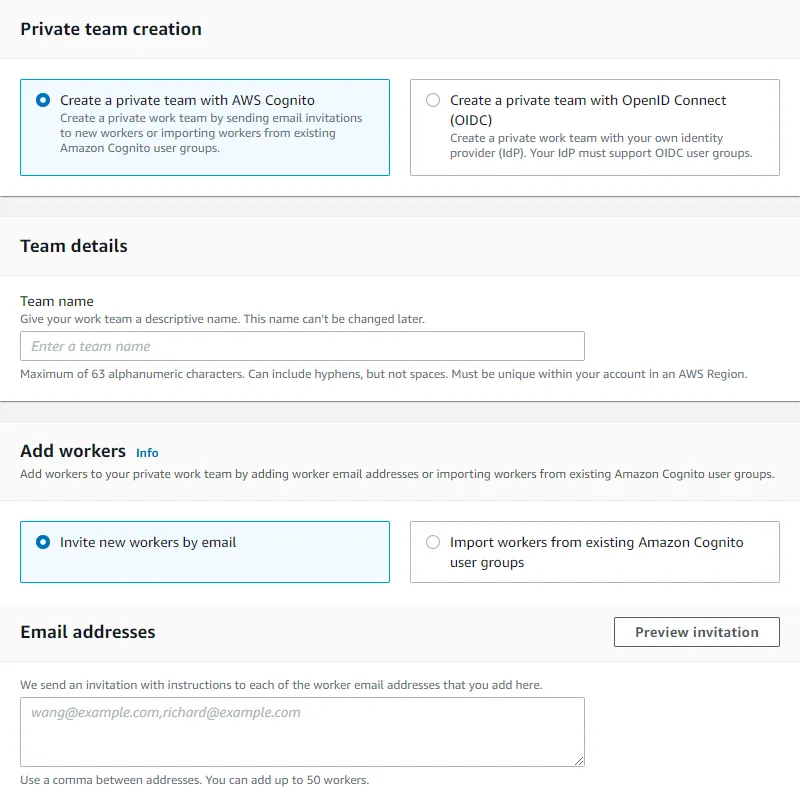

Here we create a private work team using the Amazon A2I console. In the navigation pane, choose Labeling workforces under Ground Truth. A private team can be managed with Amazon Cognito, inviting workers by email, or through your own OpenID Connect (OIDC) identity provider. For this case we use the first option. Note that you can also use the Amazon Mechanical Turk workforce or a vendor workforce instead of a private team.
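For reference, a Cognito-backed private team can also be created with the SageMaker `create_workteam` API. The sketch below assumes a Cognito user pool, user group, and app client already exist; all names and IDs are hypothetical placeholders.

```python
def create_workteam_kwargs(team_name: str, user_pool_id: str,
                           user_group: str, client_id: str) -> dict:
    """Build keyword arguments for SageMaker create_workteam (Cognito members)."""
    return {
        "WorkteamName": team_name,
        "Description": f"Private review team {team_name}",
        "MemberDefinitions": [
            {
                "CognitoMemberDefinition": {
                    "UserPool": user_pool_id,   # existing Cognito user pool ID
                    "UserGroup": user_group,    # group inside that pool
                    "ClientId": client_id,      # app client of the pool
                }
            }
        ],
    }


def create_private_team(team_name: str, user_pool_id: str,
                        user_group: str, client_id: str) -> str:
    """Create the team and return its ARN (needs AWS credentials)."""
    import boto3  # imported here so the helper above works without AWS access

    sagemaker = boto3.client("sagemaker")
    response = sagemaker.create_workteam(
        **create_workteam_kwargs(team_name, user_pool_id, user_group, client_id)
    )
    return response["WorkteamArn"]
```

The returned work team ARN is what a human review workflow refers to when it selects its workers.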

Step 3: Create a Human Review Workflow

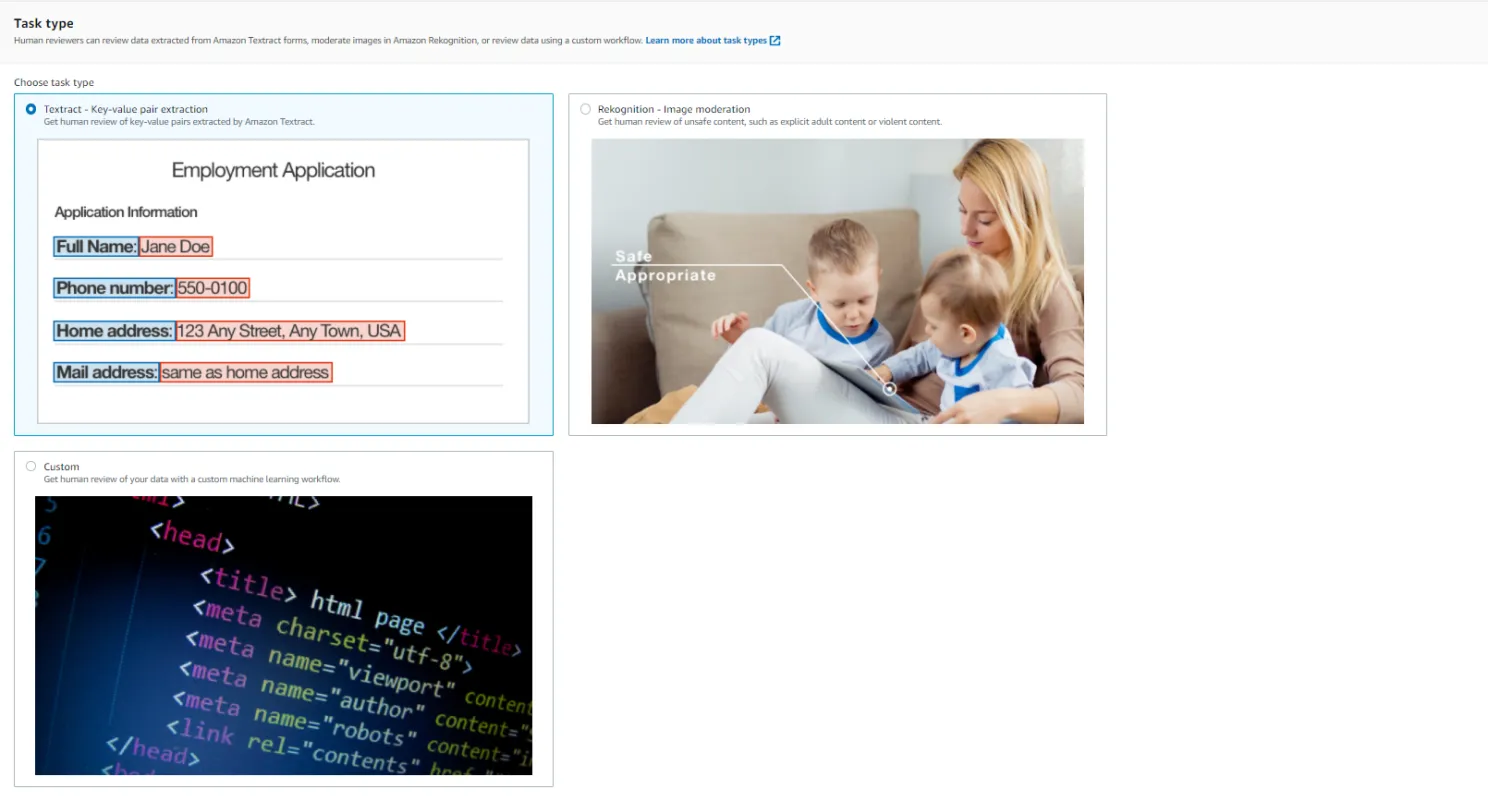

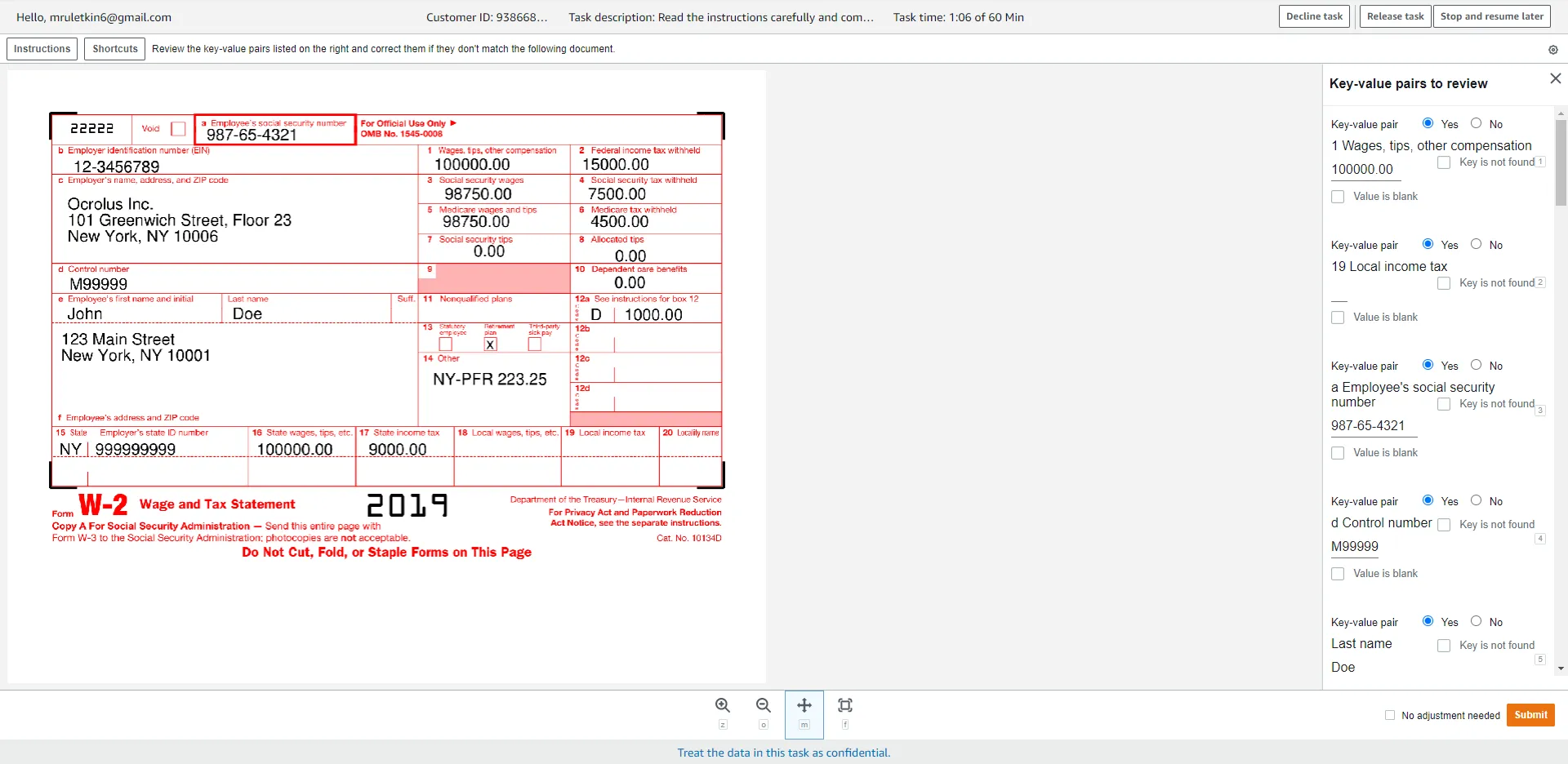

Now we create our human review workflow on the Human review workflows page under Augmented AI. In the settings we select the task type. The options are Textract (key-value pair extraction), Rekognition (image moderation), or a custom machine learning workflow.

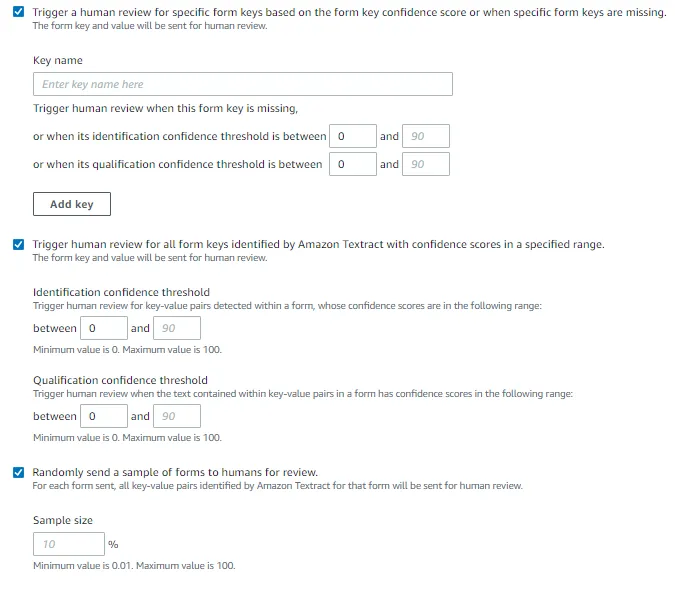

Then we define the conditions under which a human review is triggered. A review can be invoked:

- for specific form keys, based on the form key confidence score or when those form keys are missing;
- for all form keys identified by Textract whose confidence scores fall in a specified range;
- for a random sample of forms.
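When a workflow is created through the API rather than the console, these trigger conditions are passed as a JSON document. The sketch below builds such a document in Python. The condition types and parameter names (`ImportantFormKeyConfidenceCheck`, `MissingImportantFormKey`, `Sampling`, and so on) reflect our understanding of the A2I activation-conditions schema for Textract and should be checked against the current AWS documentation; the function name and its arguments are hypothetical.

```python
import json


def build_activation_conditions(important_key: str,
                                confidence_below: int,
                                sampling_percentage: int) -> str:
    """Return activation conditions as a JSON string.

    A review is triggered if ANY of the three conditions holds.
    """
    conditions = {
        "Conditions": [
            {
                "Or": [
                    {
                        # The key was found, but with low confidence.
                        "ConditionType": "ImportantFormKeyConfidenceCheck",
                        "ConditionParameters": {
                            "ImportantFormKey": important_key,
                            "KeyValueBlockConfidenceLessThan": confidence_below,
                            "WordBlockConfidenceLessThan": confidence_below,
                        },
                    },
                    {
                        # The key was not found at all.
                        "ConditionType": "MissingImportantFormKey",
                        "ConditionParameters": {"ImportantFormKey": important_key},
                    },
                    {
                        # Send a random share of documents regardless of confidence.
                        "ConditionType": "Sampling",
                        "ConditionParameters": {
                            "RandomSamplingPercentage": sampling_percentage
                        },
                    },
                ]
            }
        ]
    }
    return json.dumps(conditions)
```

For example, `build_activation_conditions("Mail address", 90, 5)` would describe a workflow that asks for review when the hypothetical key "Mail address" is missing or scored below 90, and additionally samples 5 percent of documents.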

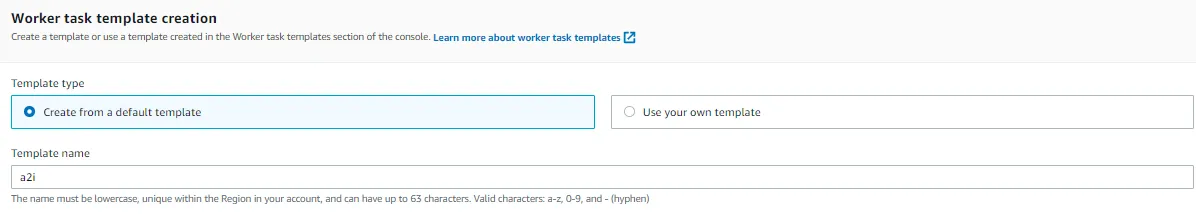

Next comes the worker task template. The first time, we create it from the default template for Textract. If you create another human review workflow later, you can reuse the same template.

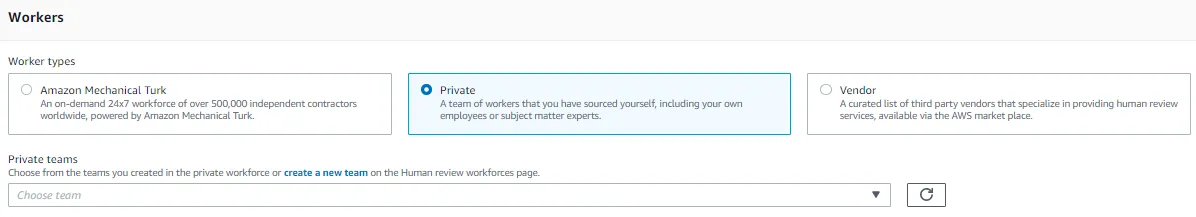

The last step in creating a human review workflow is choosing the workers. We select the private team created in Step 2. It is also possible to use Amazon Mechanical Turk, a crowdsourcing marketplace, or a vendor workforce.
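The console settings from this step map onto the SageMaker `create_flow_definition` API. The sketch below shows that mapping under a few assumptions: the work team, worker task template (human task UI), and IAM role already exist, and every ARN, name, and path is a hypothetical placeholder supplied by the caller.

```python
def flow_definition_kwargs(name: str, role_arn: str, workteam_arn: str,
                           task_ui_arn: str, output_s3_path: str,
                           activation_conditions_json: str) -> dict:
    """Build keyword arguments for SageMaker create_flow_definition."""
    return {
        "FlowDefinitionName": name,
        "RoleArn": role_arn,
        # Task type: Textract key-value pair extraction.
        "HumanLoopRequestSource": {
            "AwsManagedHumanLoopRequestSource":
                "AWS/Textract/AnalyzeDocument/Forms/V1"
        },
        # Conditions that decide when a human review is triggered.
        "HumanLoopActivationConfig": {
            "HumanLoopActivationConditionsConfig": {
                "HumanLoopActivationConditions": activation_conditions_json
            }
        },
        # Who reviews, and what they see.
        "HumanLoopConfig": {
            "WorkteamArn": workteam_arn,
            "HumanTaskUiArn": task_ui_arn,
            "TaskTitle": "Review key-value pairs",
            "TaskDescription": "Check the form values extracted by Textract",
            "TaskCount": 1,  # number of workers per document
        },
        # Where the review results are written.
        "OutputConfig": {"S3OutputPath": output_s3_path},
    }


def create_flow_definition(**settings) -> str:
    """Create the workflow and return its ARN (needs AWS credentials)."""
    import boto3  # imported here so the helper above works without AWS access

    response = boto3.client("sagemaker").create_flow_definition(
        **flow_definition_kwargs(**settings)
    )
    return response["FlowDefinitionArn"]
```

The returned flow definition ARN is the value that is later passed as `FlowDefinitionArn` when starting a human loop.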

Step 4: Start a Human Loop

Finally, we can start a human loop by calling the Amazon Textract AnalyzeDocument API operation. This can be done in two ways: with an AWS SDK or with the AWS CLI. For this case we use the AWS SDK for Python (Boto3).

We make two calls one after the other, each with a different image. The first image contains key-value pairs; the second does not. This lets us check how well A2I decides which documents need human review.

Below is an example of the code to start our human loop.

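    import boto3

    # Textract client; use the same Region as the bucket and the workflow.
    client = boto3.client('textract')

    response = client.analyze_document(
        # Sample document stored in the S3 bucket from Step 1.
        Document={
            "S3Object": {
                "Bucket": "DOC-EXAMPLE-BUCKET",
                "Name": "document-name.jpg"
            }
        },
        # Passing HumanLoopConfig lets A2I evaluate the workflow conditions.
        HumanLoopConfig={
            "FlowDefinitionArn": "arn:aws:sagemaker:us-west-2:111122223333:flow-definition/flow-definition-name",
            "HumanLoopName": "human-loop-name",
            "DataAttributes": {
                # List only the classifiers that apply to your document.
                "ContentClassifiers": [
                    "FreeOfPersonallyIdentifiableInformation",
                    "FreeOfAdultContent"
                ]
            }
        },
        FeatureTypes=["TABLES", "FORMS"]
    )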
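The response returned by `analyze_document` indicates whether a human loop was actually started. A small helper for reading that part of the response might look like the sketch below; the helper name is hypothetical, and it relies on the `HumanLoopActivationOutput` structure documented for the Textract response.

```python
def summarize_human_loop(response: dict) -> dict:
    """Report whether AnalyzeDocument started a human loop, and why."""
    activation = response.get("HumanLoopActivationOutput", {})
    # HumanLoopArn is only present when a loop was actually created.
    loop_arn = activation.get("HumanLoopArn")
    return {
        "human_loop_started": loop_arn is not None,
        "human_loop_arn": loop_arn,
        "reasons": activation.get("HumanLoopActivationReasons", []),
    }
```

Calling `summarize_human_loop(response)` after each of the two requests makes it easy to see which document, if any, was sent for review.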

Step 5: View Human Loop Status and get Output Data

As soon as a human loop is started, we can monitor its status in the Amazon A2I console. If human review is required, a task is created, and we can open our labeling portal and perform the task. When the task is completed, the output data is available as a JSON file saved in the S3 bucket.
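The same check can be done from code with the A2I runtime client (`sagemaker-a2i-runtime`). The following sketch polls one loop and, once it has completed, downloads the output file. The function names are hypothetical, and the `humanAnswers` key reflects our understanding of the output file layout, so treat it as an assumption to verify against your own output.

```python
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> tuple:
    """Split 's3://bucket/key' into (bucket, key)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"Not an S3 URI: {uri}")
    return parsed.netloc, parsed.path.lstrip("/")


def fetch_human_answers(human_loop_name: str) -> list:
    """Return the reviewers' answers, or [] if the loop is not completed yet.

    Needs AWS credentials with access to A2I and the output bucket.
    """
    import json
    import boto3  # imported here so split_s3_uri works without AWS access

    a2i = boto3.client("sagemaker-a2i-runtime")
    loop = a2i.describe_human_loop(HumanLoopName=human_loop_name)
    if loop["HumanLoopStatus"] != "Completed":
        return []

    bucket, key = split_s3_uri(loop["HumanLoopOutput"]["OutputS3Uri"])
    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()
    # Assumed layout: answers are stored under the "humanAnswers" key.
    return json.loads(body).get("humanAnswers", [])
```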

In our test, A2I started only one human loop for the two documents. This suggests that the service correctly picked out the document that required human review, although two documents are of course a very small sample.

Conclusion

We created a test case for A2I with Amazon Textract to show its functionality. With this setup, humans can review important form key-value pairs in single-page documents, or Amazon Textract can randomly sample documents from your dataset and send them to humans for review. The service is able to make simple analyses and start a human review only when it is really required. So Amazon A2I in conjunction with Amazon Textract can be a good, time-saving solution for straightforward document processing tasks.